Are you facing challenges with optimization in artificial intelligence, often getting stuck in less-than-ideal solutions? You’re not alone. Many developers and data scientists encounter similar obstacles when striving for both efficiency and accuracy. Hill climbing in AI offers a practical, local search-based approach that iteratively improves solutions to reach better outcomes. In this guide, you’ll discover how the algorithm works, explore its types like steepest ascent and stochastic methods, and see how it's applied in real-world scenarios such as route planning and scheduling. We’ll also cover strategies to handle limitations like local maxima and introduce enhancements like simulated annealing to boost performance.

What is Hill Climbing in AI?

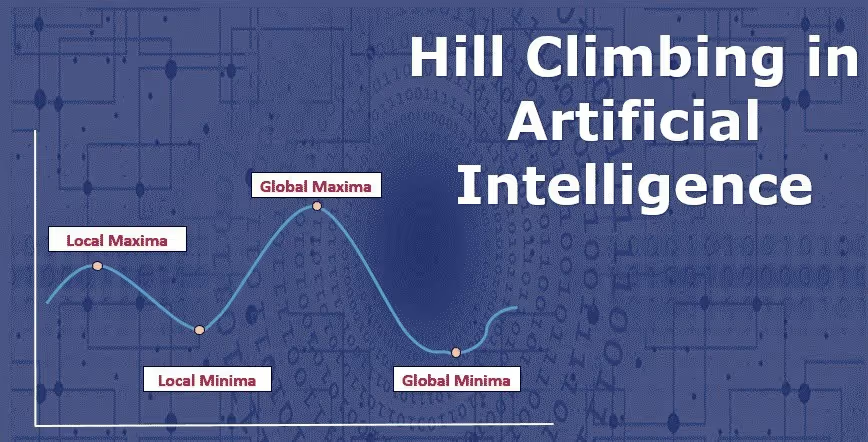

Hill climbing is a local search algorithm that looks for a good solution to a problem by iteratively improving an initial solution. Imagine hiking up a hill: you take small steps upward until no step leads higher. The peak you reach is a locally optimal solution, which may or may not be the global optimum. The method is simple, and it is useful for problems where exhaustive search is impractical.

Key Characteristics:

- Greedy Approach: Always moves toward a higher-value neighbor.

- Single-Point Search: Operates on one candidate solution at a time.

- Heuristic-Driven: Uses an objective function to evaluate progress.

How Hill Climbing Works: A Step-by-Step Breakdown

- Generate Initial Solution: Start with a random state or one produced by a heuristic (a pragmatic rule of thumb for the problem).

- Evaluate the Current State: Use an objective function to measure its quality.

- Apply Operators: Modify the current state using predefined rules (e.g., swapping cities in the traveling salesman problem).

- Compare and Update: If the new state is better, it becomes the current state.

- Terminate: Stop and return the current state when no better neighboring state exists or a stopping condition (such as an iteration limit) is met.
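
The steps above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the names `hill_climb`, `toy_score`, and `toy_neighbors` are assumptions made for this example, and the toy problem (maximize -(x - 7)^2 over the integers) is chosen only because it has a single peak.

```python
import random

def hill_climb(initial, neighbors, score, max_steps=1000):
    """Simple hill climbing: take the first neighbor that improves the score."""
    current = initial                          # step 1: initial solution
    for _ in range(max_steps):                 # stopping condition: step budget
        current_score = score(current)         # step 2: evaluate the current state
        for candidate in neighbors(current):   # step 3: apply operators
            if score(candidate) > current_score:
                current = candidate            # step 4: compare and update
                break
        else:
            return current                     # step 5: no better neighbor exists
    return current

# Hypothetical toy problem with a single peak at x = 7.
def toy_score(x):
    return -(x - 7) ** 2

def toy_neighbors(x):
    return [x - 1, x + 1]

random.seed(0)
start = random.randint(-50, 50)
result = hill_climb(start, toy_neighbors, toy_score)
print(f"start={start}, result={result}")
```

Because this landscape has only one peak, any start within the step budget ends at the same place. On landscapes with several peaks the result depends on where the search begins.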

Types of Hill Climbing in AI

1. Steepest Ascent Hill Climbing

This variant evaluates all neighboring states and moves to the one that gives the greatest improvement. It suits problems where each state has a small number of neighbors.

Pros:

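- Always ends at a state that no single move can improve (a local maximum or the edge of a plateau), though not necessarily the global maximum.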

- Systematic exploration.

Cons:

- Computationally expensive when each state has many neighbors, because every neighbor is evaluated at every step.
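
A minimal sketch of steepest ascent, assuming a toy bit-string problem where the score is the number of 1 bits and a neighbor differs by one flipped bit. The function names and the problem are illustrative assumptions, not part of any library.

```python
def steepest_ascent(initial, neighbors, score):
    """Evaluate every neighbor and move to the best one until none improves."""
    current = initial
    while True:
        best_neighbor = max(neighbors(current), key=score)
        if score(best_neighbor) <= score(current):
            return current          # no neighbor is strictly better
        current = best_neighbor

# Hypothetical toy problem: maximize the number of 1 bits.
def flip_neighbors(bits):
    return [bits[:i] + (1 - bits[i],) + bits[i + 1:] for i in range(len(bits))]

def count_ones(bits):
    return sum(bits)

result = steepest_ascent((0, 1, 0, 0, 1), flip_neighbors, count_ones)
print(result)
```

Each step costs one score evaluation per neighbor, which is where the expense comes from as the neighborhood grows.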

2. Stochastic Hill Climbing

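Picks a neighboring state at random and accepts it if it is better than the current state. Each step is cheap because the full neighborhood is never evaluated, and the randomness means repeated runs can follow different paths. On its own it still stops making progress on plateaus and at local maxima.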
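
One way to sketch this variant, under the assumption of a continuous toy objective with its peak at x = 3 and a neighbor generated by a small random step. `stochastic_hill_climb`, `toy_score`, and `random_step` are names invented for this example.

```python
import random

def stochastic_hill_climb(initial, random_neighbor, score, rng, max_iters=5000):
    """Sample one random neighbor per iteration; keep it only if it is better."""
    current = initial
    for _ in range(max_iters):
        candidate = random_neighbor(current, rng)
        if score(candidate) > score(current):
            current = candidate
    return current

# Hypothetical objective with a single peak at x = 3.
def toy_score(x):
    return -(x - 3.0) ** 2

def random_step(x, rng):
    return x + rng.uniform(-0.5, 0.5)

best = stochastic_hill_climb(-10.0, random_step, toy_score, random.Random(42))
print(round(best, 3))
```

The loop runs for a fixed iteration budget because, with random sampling, a single rejected candidate does not prove that no better neighbor exists.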

3. Random Restart Hill Climbing

Runs several independent hill climbs from different initial solutions and keeps the best result, which reduces the chance of settling for a poor local maximum.
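
A small sketch of random restarts, assuming a hypothetical one-dimensional landscape stored as a list, where a state is an index and its neighbors are the adjacent indices. The landscape values and function names are made up for illustration.

```python
import random

# Hypothetical landscape: local peaks at indices 1, 3, 5, 8; the global peak is index 5.
LANDSCAPE = [1, 3, 2, 5, 4, 9, 6, 2, 7, 1]

def climb(i):
    """Steepest-ascent climb from index i to the nearest local peak."""
    while True:
        options = [j for j in (i - 1, i + 1) if 0 <= j < len(LANDSCAPE)]
        best = max(options, key=lambda j: LANDSCAPE[j])
        if LANDSCAPE[best] <= LANDSCAPE[i]:
            return i
        i = best

def best_of_restarts(starts):
    """Climb from every start and keep the highest peak found."""
    return max((climb(s) for s in starts), key=lambda j: LANDSCAPE[j])

rng = random.Random(0)
starts = [rng.randrange(len(LANDSCAPE)) for _ in range(20)]
found = best_of_restarts(starts)
print(found, LANDSCAPE[found])
```

A single climb from index 0 stops at index 1, while a restart that happens to begin at index 4, 5, or 6 reaches the global peak. More restarts raise the odds of landing in that basin but never guarantee it.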

Applications of Hill Climbing in AI

Hill climbing, a cornerstone local search algorithm in artificial intelligence, is widely used to tackle optimization problems where finding an exact solution is computationally impractical. Its simplicity, speed, and adaptability make it a go-to choice across industries. Below, we explore its most impactful applications, complete with examples and actionable insights.

1. Traveling Salesman Problem (TSP)

Objective: Find the shortest possible route that visits a set of cities and returns to the origin.

How Hill Climbing Works:

- Initial Solution: Generate a random route.

- Operator: Swap two cities to reduce total distance.

- Objective Function: Minimize route length.
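
The outline above might look like the following sketch. The city coordinates are hypothetical (four corners of a unit square), and `tsp_hill_climb` and `route_length` are names introduced here; the search accepts the first swap that shortens the tour.

```python
import itertools
import math
import random

def route_length(route, coords):
    """Total length of the closed tour, including the leg back to the origin."""
    return sum(
        math.dist(coords[route[i]], coords[route[(i + 1) % len(route)]])
        for i in range(len(route))
    )

def tsp_hill_climb(coords, rng):
    route = list(range(len(coords)))
    rng.shuffle(route)                        # initial solution: a random route
    improved = True
    while improved:
        improved = False
        for i, j in itertools.combinations(range(len(route)), 2):
            candidate = route[:]
            candidate[i], candidate[j] = candidate[j], candidate[i]  # operator: swap two cities
            # The small tolerance avoids looping on floating-point noise.
            if route_length(candidate, coords) < route_length(route, coords) - 1e-12:
                route, improved = candidate, True
                break
    return route

# Hypothetical city coordinates: the four corners of a unit square.
SQUARE = [(0, 0), (1, 0), (1, 1), (0, 1)]
tour = tsp_hill_climb(SQUARE, random.Random(1))
print(tour, route_length(tour, SQUARE))
```

On this tiny instance every self-crossing tour has an improving swap, so the search ends on the perimeter. On larger instances the same loop can stop at a tour that no single swap improves but that is still far from the shortest.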

2. Machine Learning Hyperparameter Tuning

Goal: Maximize model accuracy by optimizing hyperparameters (e.g., learning rate, batch size).

Implementation:

- Start with an initial solution (e.g., default parameters).

- Apply operators: Adjust one hyperparameter at a time.

- Evaluate the new state using validation accuracy.
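
As a rough sketch of this loop, the example below adjusts one hyperparameter at a time over a small grid. `validation_accuracy` is a hypothetical stand-in that returns a synthetic score; in practice it would train a model and measure accuracy on a validation set. The grid values and the location of the synthetic optimum are assumptions.

```python
GRID = {
    "learning_rate": [0.0001, 0.001, 0.01, 0.1],
    "batch_size": [16, 32, 64, 128],
}

def validation_accuracy(params):
    # Hypothetical stand-in for "train the model, score it on validation data".
    # The synthetic optimum is learning_rate=0.01, batch_size=64.
    lr_off = abs(GRID["learning_rate"].index(params["learning_rate"]) - 2)
    bs_off = abs(GRID["batch_size"].index(params["batch_size"]) - 2)
    return 0.9 - 0.05 * lr_off - 0.02 * bs_off

def tune(params):
    current, current_acc = dict(params), validation_accuracy(params)
    while True:
        best, best_acc = None, current_acc
        for name, values in GRID.items():          # adjust one hyperparameter at a time
            idx = values.index(current[name])
            for new_idx in (idx - 1, idx + 1):
                if 0 <= new_idx < len(values):
                    candidate = {**current, name: values[new_idx]}
                    acc = validation_accuracy(candidate)
                    if acc > best_acc:
                        best, best_acc = candidate, acc
        if best is None:
            return current, current_acc            # no single change helps
        current, current_acc = best, best_acc

best_params, best_acc = tune({"learning_rate": 0.0001, "batch_size": 16})
print(best_params, best_acc)
```

With a real model each call to the scoring function is a full training run, so the number of evaluations per step is usually the main cost to watch.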

3. Automated Scheduling

Use Cases: Employee shift planning, project deadlines, or manufacturing workflows.

Process:

- Objective Function: Minimize conflicts or maximize resource utilization.

- Operators: Swap tasks, extend deadlines, or reassign resources.
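
A minimal sketch of the process, assuming four hypothetical tasks, three time slots, and a list of task pairs that must not share a slot. The operator here reassigns one task to another slot, and the objective counts conflicts; all names and data are invented for the example.

```python
# Hypothetical pairs of tasks that cannot run in the same time slot.
CONFLICT_PAIRS = [("A", "B"), ("B", "C"), ("A", "C"), ("C", "D")]
SLOTS = 3

def conflicts(schedule):
    """Objective to minimize: conflicting pairs placed in the same slot."""
    return sum(1 for a, b in CONFLICT_PAIRS if schedule[a] == schedule[b])

def improve(schedule):
    schedule = dict(schedule)
    while True:
        best_move, best_cost = None, conflicts(schedule)
        for task in schedule:
            for slot in range(SLOTS):
                if slot != schedule[task]:
                    cost = conflicts({**schedule, task: slot})  # operator: reassign one task
                    if cost < best_cost:
                        best_move, best_cost = (task, slot), cost
        if best_move is None:
            return schedule                   # no single reassignment reduces conflicts
        schedule[best_move[0]] = best_move[1]

initial = {"A": 0, "B": 0, "C": 0, "D": 0}
final = improve(initial)
print(final, conflicts(final))
```

Here two reassignments remove all four conflicts. Tighter instances can leave the search at a schedule with remaining conflicts that no single move fixes.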

4. Robotics Path Planning

Challenge: Navigate robots through dynamic environments while avoiding obstacles.

How It Helps:

- Current State: Robot’s current position.

- Neighbors: Possible moves (e.g., forward, left, right).

- Objective Function: Minimize distance to the goal while avoiding collisions.
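
The mapping above can be illustrated with a grid-world sketch. It assumes a static grid, four-directional moves, and Manhattan distance to the goal as the objective; `plan_path` and the obstacle layouts are hypothetical.

```python
def plan_path(start, goal, obstacles, width, height):
    """Greedy descent on distance-to-goal; stops early if no move gets closer."""
    def distance(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    path, current = [start], start
    while current != goal:
        moves = [(current[0] + dx, current[1] + dy)
                 for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1))]
        moves = [m for m in moves
                 if 0 <= m[0] < width and 0 <= m[1] < height and m not in obstacles]
        if not moves:
            break
        best = min(moves, key=distance)
        if distance(best) >= distance(current):
            break                              # stuck: every free move is no closer
        current = best
        path.append(current)
    return path

# Hypothetical 5x5 grid with two obstacles.
path = plan_path((0, 0), (4, 4), {(1, 0), (2, 2)}, 5, 5)
print(path)

# A wall directly between start and goal leaves the robot stuck at the start.
blocked = plan_path((0, 0), (2, 0), {(1, 0)}, 3, 2)
print(blocked)
```

The second call shows the typical failure: reaching the goal would require first moving away from it, which a purely greedy rule never does.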

Advantages and Limitations

Problems Faced by the Hill Climbing Algorithm in AI

The hill climbing algorithm is a useful optimization method, but like other greedy local search methods, it has built-in limitations that can affect the quality of the solutions it finds.

1. Local Maxima

Problem:

A local maximum is a suboptimal solution that appears better than all its immediate neighbors but isn’t the global best. Hill climbing moves upward and gets stuck here, unable to explore beyond.
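
A short illustration of getting stuck, assuming a hypothetical integer landscape with a small peak at x = 2 and a taller one at x = 8. The function `height` is invented for this example.

```python
def height(x):
    # Hypothetical landscape: local peak at x=2 (height 4), global peak at x=8 (height 9).
    return max(4 - (x - 2) ** 2, 9 - (x - 8) ** 2)

def climb(x):
    """Steepest-ascent climb over integer positions."""
    while True:
        best = max((x - 1, x + 1), key=height)
        if height(best) <= height(x):
            return x
        x = best

print(climb(0), height(climb(0)))   # starts on the slope of the smaller peak
print(climb(5), height(climb(5)))   # starts on the slope of the taller peak
```

Starting at 0 or 4 the search ends at x = 2, because both neighbors of 2 are lower. Only starts on the slope of the taller peak reach x = 8.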

2. Plateaus: The "Flatland Dilemma"

Problem:

A plateau is a flat region where all neighboring states yield the same objective function value. The algorithm cannot decide which direction to move, leading to stagnation.
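
One common workaround is to allow a limited number of sideways moves to equally good neighbors. The sketch below assumes a hypothetical list-based landscape with a flat stretch, and it also remembers visited states so the search does not bounce back and forth. That memory is an addition for this example, not part of basic hill climbing.

```python
# Hypothetical landscape: a plateau of 2s between index 1 and index 4, then a peak.
VALUES = [1, 2, 2, 2, 2, 5, 3]

def climb_with_sideways(values, start, max_sideways):
    current, sideways_left = start, max_sideways
    visited = {start}                      # assumption: avoid revisiting states
    while True:
        options = [j for j in (current - 1, current + 1)
                   if 0 <= j < len(values) and j not in visited]
        if not options:
            return current
        best = max(options, key=lambda j: values[j])
        if values[best] > values[current]:
            sideways_left = max_sideways   # uphill move: reset the sideways budget
        elif values[best] == values[current] and sideways_left > 0:
            sideways_left -= 1             # sideways move across the plateau
        else:
            return current
        current = best
        visited.add(current)

print(climb_with_sideways(VALUES, 0, max_sideways=0))
print(climb_with_sideways(VALUES, 0, max_sideways=10))
```

With no sideways moves the search stops at the start of the plateau. With a budget of ten it crosses the flat stretch and reaches the peak at index 5.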

3. Ridges: The "Narrow Path" Problem

Problem:

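A ridge is a narrow, elevated region whose upward direction does not line up with any single available move. Each point along it looks like a local maximum to its immediate neighbors, so the search sees a sequence of local maxima that are not directly adjacent. Hill climbing struggles here because moves are restricted to immediate neighbors, making upward progress difficult.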

4. Dependence on the Initial Solution

Problem:

The algorithm’s success heavily depends on the initial solution. A poor starting point can lead to convergence at a suboptimal state.

5. No Backtracking: The "One-Way Path"

Problem:

Hill climbing lacks memory of past states. Once it moves to a new state, it cannot revert, even if a previous state offered better long-term potential.

6. Neighbor Selection Trade-offs

Problem:

- Small Neighborhoods: Risk missing better solutions outside the immediate vicinity.

- Large Neighborhoods: Increased computational cost.

7. Exploration vs. Exploitation Imbalance

Problem:

Hill climbing prioritizes exploitation (choosing the best immediate move) over exploration (searching new regions). This limits its ability to discover global optima.

Overcoming Local Maxima: 5 Actionable Strategies

- Random Restarts: Escape local maxima and plateaus by restarting from new initial solutions.

- Simulated Annealing: Introduce probabilistic acceptance of worse states (inspired by metallurgy).

- Hybrid Algorithms: Combine hill climbing with genetic algorithms for diversification.

- Adjust Operators: Change the operators you apply to the current state (e.g., allow larger “jumps”).

- Noise Injection: Temporarily relax the objective function to explore new regions.
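
Of the strategies above, simulated annealing is the one that changes the acceptance rule itself. The following is a minimal sketch under stated assumptions: a hypothetical two-peak integer landscape, a geometric cooling schedule, and illustrative parameter values that have not been tuned. It returns the best state seen during the run.

```python
import math
import random

def simulated_annealing(initial, random_neighbor, score, rng,
                        temperature=10.0, cooling=0.995, steps=5000):
    current = best = initial
    for _ in range(steps):
        candidate = random_neighbor(current, rng)
        delta = score(candidate) - score(current)
        # Always accept improvements; accept worse states with probability exp(delta / T).
        if delta >= 0 or rng.random() < math.exp(delta / temperature):
            current = candidate
        if score(current) > score(best):
            best = current
        temperature = max(temperature * cooling, 1e-9)   # cool down, avoid division by zero
    return best

# Hypothetical landscape: local peak at x=2 (height 4), global peak at x=8 (height 9).
def height(x):
    return max(4 - (x - 2) ** 2, 9 - (x - 8) ** 2)

def step(x, rng):
    return min(10, max(0, x + rng.choice((-1, 1))))

result = simulated_annealing(0, step, height, random.Random(0))
print(result, height(result))
```

Early on, the high temperature lets the search walk downhill through the valley between the peaks. As the temperature falls, the rule behaves more and more like plain hill climbing.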

Hill Climbing vs. Simulated Annealing

Best Practices for Implementing Hill Climbing

- Choose the Right Variant: Use steepest ascent for precision, stochastic for speed.

- Optimize the Objective Function: Ensure it accurately reflects the problem’s goals.

- Monitor Plateaus: Track progress to detect stagnation early.

- Combine with Other Algorithms: Pair with Tabu Search or genetic algorithms for robustness.

Conclusion

Hill climbing in AI remains a powerful yet simple approach for solving optimization problems. Despite challenges like getting stuck in local maxima, techniques such as random restarts, simulated annealing, and hybrid models can significantly improve outcomes. By mastering the types, applications, and limitations of hill climbing in AI, developers and researchers can create smarter, more efficient AI systems for real-world use cases.

FAQ: Hill Climbing in AI

What is hill climbing in AI?

Hill climbing is a heuristic search algorithm that iteratively moves toward better solutions by selecting the best neighboring state at each step, aiming to maximize (or minimize) an evaluation function.

How does hill climbing work?

You start with an initial state, evaluate its neighbors, and move to the neighbor with the highest (or lowest) value. Repeat until no further improvement is possible.

What are the advantages of hill climbing?

It is simple to implement, requires little memory, works fast for certain problem types, and can be effective for local optimization.

What are limitations or disadvantages of hill climbing?

It can get stuck in local optima, plateaus, or ridges, and may fail to find the global optimum. It also depends heavily on initial state selection.

What variants of hill climbing exist?

Variants include stochastic hill climbing (randomly choose among better moves), first-choice hill climbing, and random-restart hill climbing (restart from new initial points to avoid local maxima).

In what AI problems is hill climbing used?

It is often used in scheduling tasks, optimization problems, route planning, game AI, and solving combinatorial puzzles like the n-queens problem.
