Are you curious why controlling the output of generative AI systems is important? This step is critical for maintaining reliability, safety, and business trust in production environments. Here is everything you need to know about it.

Generative AI systems, including large language models (LLMs), image synthesis engines, and multimodal generators, are rapidly transforming content creation across many organizations. They help teams automate workflows and make faster decisions.

However, generative AI outputs are not always reliable, unbiased, or safe. Without effective control mechanisms, these systems can produce serious errors, misleading information, and socially unacceptable content. This undermines user trust and limits enterprise-ready AI adoption.

In this article, you will learn why controlling the output of generative AI systems is critical. Drawing on current research and industry insights, we outline the key risks and mitigation strategies relevant to enterprises and responsible AI practitioners.

What Does “Controlling the Output of Generative AI” Mean?

Controlling AI output refers to the mechanisms and strategies used to make sure that generated results are:

- Accurate,

- Safe,

- Consistent, and

- Aligned with organizational values and regulatory standards.

Output control therefore goes beyond prompting. It includes governance frameworks, bias mitigation, quality assurance, and real-time filtering.

How Does Output Control Ensure Reliability and Safety?

Controlling AI-generated output supports reliability and safety because it helps achieve the following:

1. Reducing Hallucinations and Inaccurate Outputs

One of the most significant issues with generative AI is hallucination: the tendency to generate incorrect or fabricated information that sounds plausible. For instance, a model may produce inaccurate documents or fake news articles that look realistic but are entirely false. This is not a speculative risk. Language models generate text by predicting likely continuations from patterns learned in their training data rather than by checking facts, so they can easily produce fluent but false statements.

The possible consequences of uncontrolled hallucinations include:

- Misinformation spreading in critical domains such as healthcare, law, and finance.

- Poor business decisions based on false AI-generated data.

- A gradual decline in people's trust in AI systems.

In short, uncontrolled hallucinations can cause factual errors, financial losses, and safety threats, such as misdiagnosis in healthcare settings. As users lose trust, engagement with AI and its adoption decline.
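One common mitigation is to check whether each claim in an answer is supported by the source documents the model was given. The sketch below is a deliberately crude, hypothetical lexical heuristic, not a production fact-checker. It flags answer sentences whose content words mostly do not appear in any single source passage. The function name `unsupported_sentences` and the 0.5 overlap threshold are illustrative assumptions. Real systems typically use entailment models or citation checks instead.

```python
import re


def _tokens(text: str) -> set[str]:
    """Lowercase word tokens, ignoring short words such as 'the' or 'is'."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if len(w) > 3}


def unsupported_sentences(answer: str, sources: list[str], min_overlap: float = 0.5) -> list[str]:
    """Return answer sentences that no single source passage appears to support.

    Hypothetical heuristic: a sentence counts as supported if at least
    `min_overlap` of its content words occur in one source passage.
    """
    source_tokens = [_tokens(s) for s in sources]
    flagged = []
    # Naive sentence split on terminal punctuation followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = _tokens(sentence)
        if not words:
            continue
        best = max((len(words & src) / len(words) for src in source_tokens), default=0.0)
        if best < min_overlap:
            flagged.append(sentence)
    return flagged


sources = ["The Eiffel Tower was completed in 1889 and is located in Paris."]
answer = "The Eiffel Tower is located in Paris. It was designed by Leonardo da Vinci."
print(unsupported_sentences(answer, sources))
# → ['It was designed by Leonardo da Vinci.']
```

Flagged sentences can then be removed, regenerated, or sent to a human reviewer. Lexical overlap misses paraphrases and can be fooled by reused words, so treat it as a first-pass signal only.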

2. Preventing Unfair Consequences and Inequality

Generative AI inherits patterns from its training data, and those patterns can reflect societal biases. Left uncontrolled, a model can produce outputs that stereotype groups, perpetuate discrimination, or favor one demographic over another.

Controlling for bias is therefore critical for reducing ethical risk, since AI-assisted decisions may otherwise be unfair or discriminatory. It also supports regulatory compliance.

3. Preventing Harmful or Offensive Content

Without filtering, generative AI can produce deepfakes, hate speech, and other harmful content that hurts users socially and psychologically. This, in turn, exposes companies to legal, reputational, and operational risk.
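A first line of defense is an output filter that checks generated text against policy rules before it reaches users. The sketch below assumes a hypothetical policy expressed as regular expressions per category. The category names and patterns are illustrative only. Reliably detecting hate speech or deepfakes requires trained classifiers or a dedicated moderation service, so read this as a picture of where the check sits in the pipeline, not as a way to detect every harm.

```python
import re
from dataclasses import dataclass


@dataclass
class PolicyResult:
    allowed: bool
    reasons: list[str]


# Hypothetical policy: category -> regex patterns that violate it.
# Real deployments usually rely on trained classifiers for most categories.
POLICY: dict[str, list[str]] = {
    "personal_data": [r"\b\d{3}-\d{2}-\d{4}\b"],  # US SSN-like number
    "threat": [r"\bI will (hurt|harm) you\b"],
}


def check_output(text: str, policy: dict[str, list[str]] = POLICY) -> PolicyResult:
    """Return which policy categories the generated text violates, if any."""
    reasons = [
        category
        for category, patterns in policy.items()
        if any(re.search(p, text, flags=re.IGNORECASE) for p in patterns)
    ]
    return PolicyResult(allowed=not reasons, reasons=reasons)


print(check_output("My SSN is 123-45-6789."))
# → PolicyResult(allowed=False, reasons=['personal_data'])
```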

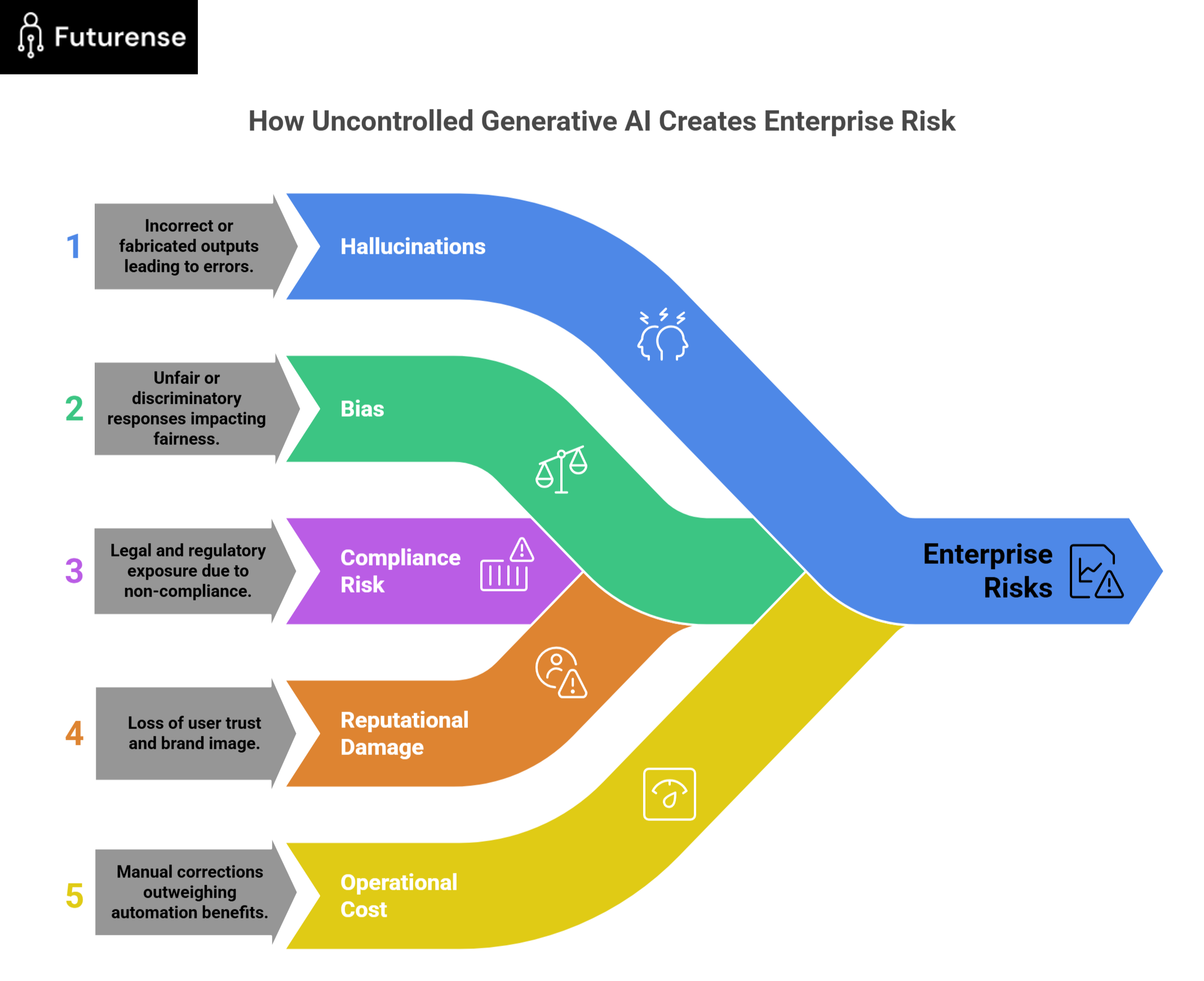

What Are the Risks of Uncontrolled AI Adoption for Businesses?

For enterprises, the stakes are high. The key risks of operating without output control are summarized in the table below:

Key Dimensions for Output Control

To manage generative AI effectively, organizations should consider the following control layers:

- Ethical Guidelines: Establishing principles and guidelines for the ethical use of AI.

- Bias Detection and Fairness Checks: Implementing tools and workflows that monitor bias metrics and evaluate outputs for demographic fairness.

- Transparency and Explainability: Understanding why an AI system made a specific choice adds accountability and supports governance.

- Reliability and Accuracy: Curated datasets, fact-checking systems, and retrieval-augmented methods help anchor AI responses in verifiable information.

- Human Oversight: Having people review and approve AI-generated content is crucial because it acts as a safety net for catching errors and contextual misalignment. Bridging the gap between AI experimentation and real-world deployment is why many enterprises now rely on forward-deployed AI engineers.
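Bias detection often starts with simple group-level metrics. One widely used example is the demographic parity gap: the difference between the highest and lowest rate of favorable outcomes across groups. The sketch below assumes a hypothetical log of `(group, outcome)` pairs, for instance whether an AI-generated screening summary recommended a candidate. The 0.1 alert threshold is an arbitrary illustration, not a standard.

```python
from collections import defaultdict


def positive_rates(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of favorable outcomes per group, from (group, outcome) pairs."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += int(outcome)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(records: list[tuple[str, bool]]) -> float:
    """Largest difference in favorable-outcome rate between any two groups."""
    rates = positive_rates(records)
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


# Hypothetical audit log of model recommendations for two groups.
records = [("A", True), ("A", True), ("A", False), ("A", True),
           ("B", True), ("B", False), ("B", False), ("B", False)]
gap = demographic_parity_gap(records)
print(gap)  # → 0.5
if gap > 0.1:  # illustrative threshold, tune per use case
    print("Flag for fairness review")
```

A large gap does not prove discrimination on its own, and parity is only one of several fairness definitions. Still, it is a cheap signal for deciding which outputs deserve a closer look.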

Best Practices for Controlling AI Output

Here are some actionable strategies enterprises can adopt to achieve enterprise-ready AI output control:

- Establish guardrails: Custom filters and policy-based constraints help enterprises control what the AI is allowed to output.

- Use high-quality training data: Unbiased, high-quality data helps ensure that outputs reflect the intended scope.

- Deploy continuous evaluation: Real-time monitoring of outputs keeps control effective over time. Enterprises can also implement retrieval-augmented generation (RAG) to ground responses in facts.

- Include human-in-the-loop (HITL) review: Human reviewers are essential for catching errors in critical content.

- Apply adversarial training: Train the AI to identify and reject harmful or misleading outputs by exposing it to adversarial examples during training.
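The practices above work best when they feed a single release decision. The sketch below assumes that automated checks (a policy filter, a grounding score, and a domain classifier) have already run. It shows one hypothetical way to route each output: block it, send it to a human reviewer, or release it. The field names and the 0.8 grounding threshold are illustrative assumptions.

```python
from dataclasses import dataclass, field
from enum import Enum


class Decision(Enum):
    BLOCK = "block"
    HUMAN_REVIEW = "human_review"
    RELEASE = "release"


@dataclass
class CheckResults:
    # Hypothetical outputs of earlier automated checks.
    policy_violations: list[str] = field(default_factory=list)
    grounding_score: float = 1.0  # 0..1, share of claims supported by sources
    high_risk_domain: bool = False  # e.g. healthcare, legal, finance


def route(checks: CheckResults, min_grounding: float = 0.8) -> Decision:
    """Decide what happens to one generated output."""
    if checks.policy_violations:
        return Decision.BLOCK  # hard policy breaches never reach users
    if checks.high_risk_domain or checks.grounding_score < min_grounding:
        return Decision.HUMAN_REVIEW  # human-in-the-loop for risky or weakly grounded output
    return Decision.RELEASE


print(route(CheckResults()).value)  # → release
print(route(CheckResults(grounding_score=0.6)).value)  # → human_review
print(route(CheckResults(policy_violations=["personal_data"])).value)  # → block
```

Blocking takes precedence over review, so reviewers are never asked to approve content that already breaks a hard policy. High-risk domains always go to a human, even when the automated scores look good.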

Final Words

By now, you should have a clear answer to the question: why is controlling the output of generative AI systems important? Generative AI offers transformative potential, but ignoring output control can have seriously harmful consequences.

If you want to avoid misinformation, bias, legal liability, and loss of user confidence, remember that controlled AI is trusted AI. Output control is not just a technical best practice; it is foundational to responsible, enterprise-ready AI adoption.

By combining governance frameworks, bias mitigation, and human oversight, you and your company can unlock AI’s value while minimizing risk.

FAQ: Output Control of GenAI Systems

Why is controlling the output of generative AI systems important?

Controlling generative AI output is important to prevent hallucinations, bias, unsafe content, and compliance risks, especially in enterprise and high-impact use cases.

Why is it important to verify the outputs of generative AI systems?

Verification ensures AI outputs are accurate, factual, and reliable, reducing the risk of misinformation, poor decisions, and loss of user trust.

How can we control the output of generative AI?

Generative AI output can be controlled using guardrails, human-in-the-loop reviews, bias checks, retrieval-based grounding, monitoring, and governance frameworks.

What is the best way to improve the output of generative AI?

The best way to improve generative AI output is by using high-quality data, clear prompts, continuous monitoring, feedback loops, and human oversight.

What is a control system in AI?

A control system in AI is a set of rules, feedback mechanisms, and oversight processes that guide model behavior to produce safe, reliable, and aligned outputs.

What are the four types of controllers?

In classical control theory, the four common types of controllers are:

- Proportional (P)

- Integral (I)

- Derivative (D)

- PID (Proportional–Integral–Derivative)
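In classical control theory, a PID controller combines all three terms into one control law. Here $e(t)$ is the error between the desired setpoint and the measured value, $u(t)$ is the controller output, and $K_p$, $K_i$, $K_d$ are tuning gains:

$$
u(t) = K_p\, e(t) + K_i \int_0^t e(\tau)\, d\tau + K_d\, \frac{de(t)}{dt}
$$

Setting two of the three gains to zero leaves the corresponding P, I, or D controller on its own.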
